This is our true common heritage: stories faithful not to what people see but to what people dream.

He lived and had a good time, stood on the barricades, fought in duels, was taken to court, chartered boats, paid pensions out of his own pocket, loved, ate, drank, earned ten million and squandered twenty, and died gently in his sleep, like a child.

They all wound, he read. The last one kills.

I am perhaps disqualified from really opining on the quality of The Club Dumas, given that I’ve never actually read The Three Musketeers. [1] And so much of the novelistic winking and nudging went — if not over my head, because the narrator and characters bent over backwards to make sure I understood the implications and allusions — a little underappreciated, as if pulling on a nostalgia that wasn’t quite there.

This was, in many ways, a guilty pleasure read. If you give me a literary detective story with some murders and references to bygone writers — I can’t help it, it’s like a more pretentious Da Vinci Code, it is candy to me.

And, like candy, there are unsavory ingredients that coat your mouth once the initial sugar rush is wiped away. It’s convenient to lampshade lazy devices like “one-dimensional femme fatale blonde” and “red herring narrator mastermind” and “Mary Sue female companion” as homages to the books this novel is lovingly aping, but so much of the novel’s progression feels flat and uninspired once you remove that single coat of postmodern paint. Pérez-Reverte’s Corso is a blend of every hard-boiled Sam Spade knockoff, a sort of Johnnie Walker Red of noir fiction.

That being said, it’s hard for a book like this to end satisfyingly — and I think The Club Dumas pulls it off, and performs the rare trick where the mystery is less interesting than its solution. [2] And what an ending scene — and ending line!:

Everyone gets the devil he deserves.

[1] It can be said, to this book’s enduring credit, that it inspired me to do so at some point this year.

[2] I’m not sure whether to call it a rejection or affirmation of postmodernism, but the ‘reveal’ that the two stories were entirely distinct and Corso’s mistake was thinking they were intertwined was a neat one, and I think says more about our participation in the books we love than the actual pastiche elements.


One of my biggest complaints with Blue Eye Samurai (a show that I liked and enjoyed overall) was that in its fence-straddling between wanting to tell a meaningful, stand-alone story and set itself up for a second season of East-meets-West bushido misadventures, it not only failed to actually provide a meaningful ending but in its failure it threw away its protagonist's character arc.

That is — not quite the case with Sugar, but Sugar's flaws lie in the fact that, six episodes into an eight-episode noir series, it hard pivots into science fiction.

Lots of hay can be made about the pivot itself — it was, I think, telegraphed artfully, and shows a level of outré experimentation that you don't often find on Apple TV.

My complaint is more that this pivot is less a new texture on what was, for me, a really entertaining noir (a very faithfully executed Chinatown-esque story, filled to the brim with metatextual film references and a Colin Farrell performance that cements him as one of my favorite lead actors working right now!) and more "okay, that noir stuff is fun, time to put it to the side and talk about this new plot point instead."

The catharsis in any detective story, noir or otherwise, is the (literal or otherwise) parlor room scene, when you get to piece together all the little scraps and hints and loose plot threads and cohere them into a satisfying gestalt. Quite literally and deliberately, that never happens in Sugar: having failed as a detective, Sugar is given (by the person who turns out to be the mastermind, in a plot point that again makes little sense except as fuel for Season 2) the address of the person he's trying to find.

What are we meant to take away from this show? What message does it try to impart, besides "Colin Farrell driving around in L.A. is cool as hell" and "humanity can be corrupting to aliens" [1]? Moreover — why does the hard pivot need to exist?

The most obvious answer I can think of is — to justify multiple seasons of the show, which is reasonable enough. But this could have existed as a straightforward six-episode miniseries and it would have been one of my favorite pieces of media ever, and I'm salty that they decided to err on the side of bombast.

[1] I know, I know, that's not exactly a charitable way to phrase that.

Buried in a snarky thread about why Google Calendar doesn’t support calendar syncing is a long, detailed explanation of why shipping this sort of thing is hard:

- Designing a consent experience for the enterprise admin and enterprise end user

- Creating a special "read only" object type that's only populated via one-way sync from a consumer account. It's not the same as a free/busy share of an entire calendar or an event set to private visibility as those have slightly different semantics. The most analogous concept is the read-only events that come from e.g. OpenTable events, but even those are a bit different from what you'd want here. Make sure this object type is rendered properly across all clients.

- Making sure that object is rendered correctly and can't be modified from the enterprise account on any native clients to preserve the one-way sync that the source of truth is the consumer account.

- Ensuring that edits made from the enterprise account in 3P clients (like Apple Calendar) are correctly dropped. Same with legacy native apps that can't be updated.

- Ensuring that the API correctly represents this new object type and drops edits made from enterprise accounts. Note: read only events are super hard when you have an ecosystem of 3P clients and an API that may not respect your readonly designation.

- Figuring out how to handle this object type for legal retention. Should it appear in Vault? No real precedent, tricky policy question.

- Can an admin modify or delete this object as they can with all other data in the enterprise account? No real precedent, tricky policy question.

- If the consumer account is deleted, account for the different wipeout period of the enterprise data.

- Make sure this event type is correctly represented in all cross-product features, e.g. the warning in Google Chat that someone is in a meeting.

- Think through a whole bunch of tricky edge cases: e.g. consumer account is deleted but a legal action requires access to the data for some reason and it exists in the audit logs of the enterprise, what happens?

Of course you can skip several of these steps and get dunked on by folks on twitter when the product looks incomplete or broken (or much worse regulators or enterprise clients).

There are two things this jeremiad brings to mind (three, if you count how satisfying and therapeutic it must have been to write it):

First: it’s always harder than you expect to build something once you’re at a significantly large size. I am guilty just like anyone else of forgetting this fact.

The advent of PLG and VC-subsidized growth means that more products are truly horizontal than ever before — the median company now is much more likely to serve both a pre-revenue startup and a Fortune 500 company than at any previous point in time, and such companies tend to contend, as a meaningful design principle, that the same product surfaces should scale gracefully with the size of the customer using it.

I called this the “Lyft problem” while at Stripe. “Why don’t you guys just ship a more granular MRR pivot table? There’s not really that much data for the average merchant!” “Yeah, well, what about Lyft.” [1]

Second: the job of “deciding what to build” is so radically different as a line-level PM embedded in a FAANG compared to being at a relatively small (<1000 HC) company. Your goal as an individual PM within a Borgish company is largely to find areas of tactical leverage, align stakeholders (lol, I know, but really), and properly balance short-term and long-term investments. These are difficult things to do that are not just underweighted but non-existent in different kinds of companies. [2]

None of this is to say that Google shouldn’t support this use case — they should, and I find it annoying that they don’t!

But I get frustrated with people whose thinking about why a company simply hasn’t shipped X stems from an assumption that they don’t care, or they are ignorant, or they are incompetent.

Administrative capacity and institutional agility are boring topics, but they’re what actually matter.

[1] This is also why I am increasingly confident that ignoring the enterprise is a tenable, reasonable strategy for certain companies: you are sacrificing revenue for agility in a way that may make huge amounts of long-term sense.

[2] The inverse is of course true as well: the best startup PMs are thrust into a position not dissimilar to an RTS, where all options are theoretically viable and your goal is to rapidly recalibrate and triage where you’re allocating your capital and labor. This is something that Microsoft does not really prepare you for.

I wanted to get a commit that was temporally some distance back as part of my experimentation with git cliff. This took some time to do, but here's what I ended up with:

git log --since="72 hours ago" --until="now" --reverse --pretty=format:"%h" | head -1

Nothing particularly fancy, but I hope it's useful!
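For what it's worth, my reading of that pipeline is: keep only commits from the last 72 hours, flip the order so the oldest comes first, and take the first hash. Here is a minimal TypeScript sketch of the same selection logic, run against an in-memory list rather than a real repository. The `Commit` shape and the `oldestCommitSince` name are made up for illustration.

```typescript
// Hypothetical stand-in for one line of `git log` output; not a real git API.
interface Commit {
  hash: string;
  committedAt: Date;
}

// Mirrors `git log --since=... --until=now --reverse | head -1`:
// keep commits inside the window, order them oldest-first, take the first one.
function oldestCommitSince(
  commits: Commit[],
  now: Date,
  hoursBack: number,
): Commit | undefined {
  const upper = now.getTime();
  const cutoff = upper - hoursBack * 60 * 60 * 1000;
  return commits
    .filter((c) => c.committedAt.getTime() >= cutoff && c.committedAt.getTime() <= upper)
    .sort((a, b) => a.committedAt.getTime() - b.committedAt.getTime())[0];
}

const now = new Date("2024-06-10T12:00:00Z");
const commits: Commit[] = [
  { hash: "a1b2c3d", committedAt: new Date("2024-06-10T09:00:00Z") },
  { hash: "e4f5a6b", committedAt: new Date("2024-06-08T15:00:00Z") },
  { hash: "0c9d8e7", committedAt: new Date("2024-06-01T08:00:00Z") }, // outside the window
];

const oldest = oldestCommitSince(commits, now, 72);
console.log(oldest?.hash);
```

The `head -1` is doing real work in the shell version: as far as I can tell, `-n 1` limits the commit list before `--reverse` is applied, so it would hand back the newest commit rather than the oldest.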

An underwhelming and inert repetition of her previous few albums, and the good individual singles (Chihiro!) do not make this any more substantial as an album-sized artifact than as another graft onto the larger, increasingly monotonic discography.

- By far the single most-fruitful tactic has been "just look at raw GET responses from Mastodon and see what things are shaped like." I know that "ActivityPub is under-specified" is a bit of a meme, but it's wild how little prior art there is.

- Something that gives distinctly bad vibes: `ostatus.org` (which ostensibly describes a bunch of the schemae for subscription interactions) now redirects to a gambling site. See more.

- Webfinger is not part of ActivityPub per se, but it makes sense as an initial beachhead.

- Here's a fun, interesting, vaguely audacious top-level goal: every subscription form on the web should take an ActivityPub username as well as an email address. Ghost has this in their mocks as well, and I don't think it's technically that complex. Hugh Rundle walks through the steps but, if my understanding is correct, he unnecessarily complicates things a little bit. All we really need to do is hit webfinger and then redirect. (Maybe a higher-level question: how do you determine if `[email protected]` is an email address or an AP username?)

- This screed is a pretty interesting bear case on the scalability of ActivityPub. I am not super worried about the implications here: I get and agree with where the author is coming from, but as an implementer this feels like a "cross this bridge when I get to it" situation.
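To make "hit webfinger and then redirect" concrete, here is a rough TypeScript sketch of the two pure steps involved. It assumes the subscriber's server behaves the way the raw Mastodon responses I've looked at do, advertising a link whose `rel` is `http://ostatus.org/schema/1.0/subscribe` with a `{uri}` template. The function names and the `example.social` / `newsletter.example` hosts are invented, and the actual HTTP fetch is left out.

```typescript
// Minimal shape of a Webfinger (RFC 7033) JSON Resource Descriptor.
interface WebfingerLink {
  rel: string;
  type?: string;
  href?: string;
  template?: string;
}

interface WebfingerResponse {
  subject: string;
  links?: WebfingerLink[];
}

// The rel that Mastodon-style servers use to advertise a remote-follow template.
const SUBSCRIBE_REL = "http://ostatus.org/schema/1.0/subscribe";

// Turn "user@host" (or "@user@host") into the Webfinger lookup URL for that account.
function webfingerUrl(handle: string): string | null {
  const match = /^@?([^@\s]+)@([^@\s]+)$/.exec(handle.trim());
  if (!match) return null;
  const [, user, domain] = match;
  const resource = encodeURIComponent(`acct:${user}@${domain}`);
  return `https://${domain}/.well-known/webfinger?resource=${resource}`;
}

// Given the subscriber's Webfinger response, build the URL to redirect them to
// so that their home server can prompt them to follow `targetUri`.
function subscribeRedirect(jrd: WebfingerResponse, targetUri: string): string | null {
  const link = (jrd.links ?? []).find(
    (l) => l.rel === SUBSCRIBE_REL && typeof l.template === "string",
  );
  if (!link || !link.template) return null;
  return link.template.replace("{uri}", encodeURIComponent(targetUri));
}

// Hypothetical response, shaped like what a Mastodon-style server returns.
const sampleResponse: WebfingerResponse = {
  subject: "acct:reader@example.social",
  links: [
    { rel: SUBSCRIBE_REL, template: "https://example.social/authorize_interaction?uri={uri}" },
  ],
};

const lookup = webfingerUrl("@reader@example.social");
const redirect = subscribeRedirect(sampleResponse, "https://newsletter.example/users/blog");
console.log(lookup);
console.log(redirect);
```

This also suggests one possible answer to the email-versus-username question: the string alone can't tell you, so try the Webfinger lookup first and fall back to treating the input as an email address if there is no usable subscribe link. That is a guess at a reasonable heuristic, not something I've validated.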

But as many first-time fathers had realized in the delivery room, there was something about the sight of an actual baby that focused the mind. In a world of abstractions, nothing was more concrete than a baby.

“There's only zero of you,” said the Queen of the Ants. In ant arithmetic, there are only two numbers: Zero, which means anything less than a million, and Some. “You can't cooperate, so even if you were King, the title would be meaningless.”

Hackworth was a forger, Dr. X was a honer. The distinction was at least as old as the digital computer. Forgers created a new technology and then forged on to the next project, having explored only the outlines of its potential. Honers got less respect because they appeared to sit still technologically, playing around with systems that were no longer start, hacking them for all they were worth, getting them to do things the forgers had never envisioned.

“Yet how am I to cultivate the persons of the barbarians for whom I have perversely been given responsibility?" … "The Master stated in his Great Learning that the extension of knowledge was the root of all other virtues."

Internal tools and small, well-scoped projects are a great avenue to tinker with technologies on the periphery of your understanding, and a Third South project has led me to spin up a small Next project using Bun [1] and Auth.js (née next-auth). Auth.js has been quite bad, and I think the experience has successfully dissuaded me from using it in any more serious endeavor.

Auth.js’s plugin-based provider system is a double-edged sword: when it works, the code footprint is lovely and small, but when it doesn’t work you get obscure, typo-riddled errors and no easy way to introspect what’s actually happening. This issue is exacerbated by the fact that the most recent change (and concomitant rebrand) significantly changed the provider API, and providers within the core repository are, at the time of this writing, still broken by those changes.

But! I am not here to rag on Auth.js! I am here to share a working Square provider implementation. This is an evolution of this example I found, which is directionally helpful but on an older version of the API. Here you go:

const CREDENTIALS = {
  // Replace `clientId` and `clientSecret` with your own.
  clientId: "clientId",
  clientSecret: "clientSecret",
};

// This is for sandbox environment. For production, use `https://connect.squareup.com`.
const BASE_URL = "https://connect.squareupsandbox.com";

const CONFIG = {
  id: "square",
  name: "Square",
  // This disables the CSRF check for the provider, is completely undocumented
  // by the 'making your own provider' guide, and is responsible for me losing
  // 3 hours of my life. I hope you enjoy it.
  checks: ["none"],
  // Pass in the `client_secret` in the request body.
  client: { token_endpoint_auth_method: "client_secret_post" },
  type: "oauth",
  authorization: {
    url: `${BASE_URL}/oauth2/authorize`,
    params: {
      // Replace the `scope` with the permissions you need.
      scope: "MERCHANT_PROFILE_READ,ORDERS_READ,ORDERS_WRITE,PAYMENTS_WRITE",
      clientId: CREDENTIALS.clientId,
      session: false,
    },
  },
  url: `${BASE_URL}/oauth2`,
  token: `${BASE_URL}/oauth2/token`,
  userinfo: `${BASE_URL}/v2/merchants`,
  // You could do with better typing here, but that's left as an exercise to the reader.
  profile: (profile: any) => {
    const unwrappedProfile = profile.merchant[0];
    return {
      id: unwrappedProfile.id,
      name: unwrappedProfile.business_name,
      email: unwrappedProfile.owner_email,
    };
  },
  ...CREDENTIALS,
};

export default CONFIG;

[1] Which has, thus far, been so easy and banal to use it warrants no further discussion.
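For anyone who does want to take up the "better typing" exercise, here is one way the `profile` callback could be tightened. This is a sketch: the `SquareMerchant` and `SquareMerchantsResponse` shapes cover only the fields the provider above reads and are inferred from that code, not from Square's API reference. `ProviderUser` and `toProviderUser` are names I made up.

```typescript
// Assumed shapes: only the fields the provider's `profile` callback touches.
interface SquareMerchant {
  id: string;
  business_name?: string;
  owner_email?: string;
}

interface SquareMerchantsResponse {
  merchant: SquareMerchant[];
}

// Hypothetical user shape returned to the auth library.
interface ProviderUser {
  id: string;
  name: string | null;
  email: string | null;
}

// Narrow an unknown userinfo payload before reading `merchant[0]`.
function isMerchantsResponse(value: unknown): value is SquareMerchantsResponse {
  if (typeof value !== "object" || value === null) return false;
  const merchant = (value as { merchant?: unknown }).merchant;
  if (!Array.isArray(merchant) || merchant.length === 0) return false;
  const first: unknown = merchant[0];
  return (
    typeof first === "object" &&
    first !== null &&
    typeof (first as { id?: unknown }).id === "string"
  );
}

// Drop-in replacement for the `profile: (profile: any) => ...` callback.
function toProviderUser(profile: unknown): ProviderUser {
  if (!isMerchantsResponse(profile)) {
    throw new Error("Unexpected Square userinfo payload");
  }
  const merchant = profile.merchant[0];
  return {
    id: merchant.id,
    // Unlike the original, missing fields become null rather than undefined.
    name: merchant.business_name ?? null,
    email: merchant.owner_email ?? null,
  };
}

const user = toProviderUser({
  merchant: [{ id: "M1", business_name: "Example Cafe" }],
});
console.log(user);
```

The main win is that a malformed response fails with a readable error at the boundary instead of a `Cannot read properties of undefined` somewhere inside the auth flow.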

This weekend marks three months since I spent an international flight migrating this blog to 11ty.

I think in many ways the true metric by which to gauge the success of a given blogging engine is how much time you spend writing and publishing content relative to how much time you spend futzing with the various knobs, whistles, and templates instead of actually writing.

By this metric, the 11ty migration is a roaring success. My hope was that it would lower the level of friction required to publish both longer-form essays and shorter-form snippets and it would be more futureproof than the previous iteration of my site, which was on a slow and rickety stack of Next and MDX [1]; I've published more writing in the past three months than in any such prior period of my life.

And in those three months I've barely had to mess with 11ty itself at all.

(My source for this site is public, btw.)

[1] Both of which I love to varying degrees, but were very much the wrong fit for my site!

It's not quite interesting or noteworthy enough to warrant a full-on essay, but yesterday we unshipped the last remaining Invoke commands and ported them over to just.

I think Invoke is a good, cool project, and I wish it well. If you're at the precise intersection of "you have shell commands that need to be encapsulated in some source of truth", "those shell commands need imperative logic", and "your application is pure Python", I think it's the right tool for the job — but we were already using just for every other part of the monorepo, and some parts of the core Django application, and it felt vestigial to hold onto some tasks.py that was out of step with the rest of the commands that you needed to run.

It's also emotionally much easier to decide to stop using a project when the latest commit is from six months ago. This is, of course, a terrible way to judge projects' efficacy — things can and should be finished! — but when I'm on the fence about something like this I tend to go with the approach that clearly has more current emphasis behind it.

It's good — better than I expected, worse than it could have been, useful all the same. I learned a good amount, such as the terminology of grace notes and the fact that the only reason EMP survived the financial crisis was because the parent company also owned Shake Shack. (There is an entire essay to be written about how Shake Shack and EMP represent a well-rounded barbell.)

Two-thirds through the book, I was ready to criticize it for being a bildungsroman without any conflict or pain: Guidara's choppy, anecdote-first style of writing makes the narrative feel like minor rising action after minor rising action, laudable breakthrough after laudable breakthrough. This was amended well with the book's climax, and the story of EMP having to shed a lot of its skin and weight to make it over the mountaintop.

Would I enjoy this book as much if I didn't love The Bear (or wasn't quite as obsessed with cuisine as a metaphor for software)? Probably not, but there are worse books in this genre, and I think it's worth your time.

I'm not loving Unreasonable Hospitality, but it did supply me with a phrase that I've been looking for:

Eventually, that gesture became one of our steps of service. The host would ask guests, “How’d you get here tonight?” If they responded, “Oh, we drove,” he’d follow up with, “Cool! Where’d you park?” If they told him they were by a meter on the street, he asked which car was theirs so one of us could run out and drop a couple of quarters into the box while they are dining. This gesture was the definition of a grace note, a sweet but nonessential addition to your experience. It was an act of hospitality that didn’t even take place within the walls of the restaurant! But this simple gift – worth fifty cents – blew people’s minds.

I think we've correctly learned to rail against unnecessary visual flourishes within SaaS (the much-derided SVG confetti craze of 2019 springs to mind), but that doesn't mean there isn't room for grace notes: nice little microinteractions that make the user's life a little bit easier. Sniper links are an example that I am obviously quite proud of; there are many others, things where going the extra mile might not make sense from a prioritization perspective but that, over time, can make the aggregate userbase much happier in a "warm and fuzzy" way that transcends NPS.

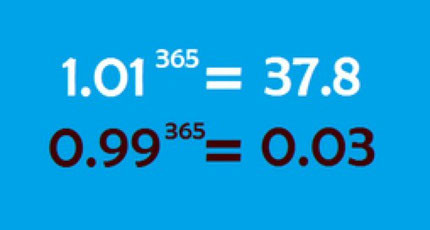

If you spend enough time digesting hackneyed business or self-improvement advice, you've probably seen someone wax poetic about the following image:

This is meant to be an illustration of the power of incrementalism (often referred to as kaizen when the writer is trying to be particularly obscure/profound) [1]. Get 1% better every single day, and then a year later you're one and a half orders of magnitude better!

This is a very lovely concept, and I like it in the abstract. The thing that I butt into every time I see it deployed is that getting 1% better is actually quite hard once the thing is a non-trivial size.

In general, it's a pretty useful practice to napkin-math out the impacts of various initiatives, but one impact doing so has is that you realize how hard it is to hit 1% from object-level work:

- Fix a few game-breaking edge cases in a project that 10% of your paid customer base uses, and only 3% of those customers have ever run into the bug? Oh, and your paid customer base is only a 20% subset of your free tier? Oof — only 0.06%.

- Improve TTFB on a specific page for prospects based in Japan? Well, only 5% of your incoming prospects are coming in from Japan, and all that TTFB did was lift conversion from 5% to 5.2%...

You get the idea.
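If you want to redo the napkin math yourself, it is just a chain of multiplications. A small TypeScript sketch using the numbers from the two examples above follows. The `reach` helper is a name I made up, and the TTFB calculation assumes Japanese prospects convert at the same baseline rate as everyone else, which the example doesn't actually say.

```typescript
// Multiply together the fraction of the base that survives each filter.
function reach(...fractions: number[]): number {
  return fractions.reduce((acc, f) => acc * f, 1);
}

// Bug fix: 20% of users pay, 10% of those use the project, 3% of those hit the bug.
const bugFixReach = reach(0.2, 0.1, 0.03);

// TTFB: 5% of prospects are in Japan, and their conversion moves from 5% to 5.2%.
const japanShare = 0.05;
const relativeLift = (0.052 - 0.05) / 0.05; // 4% more conversions within Japan
// Assumes the same 5% baseline conversion everywhere, so the overall lift is
// simply the Japanese share of traffic times the relative lift.
const overallLift = japanShare * relativeLift;

console.log(`${(bugFixReach * 100).toFixed(2)}% of all users affected`); // 0.06%
console.log(`${(overallLift * 100).toFixed(2)}% more conversions overall`); // 0.20%
```

Both results land well short of 1%, which is the whole point.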

This isn't to argue against that work — that kind of incremental work is my favorite, and I'm the happiest when I get to point to small object-level improvements that make a real difference. But it's important not to overstate their efficacy.

Instead, I argue three things:

- Devote outsized amounts of incremental work to the most well-paved parts of your product. Focusing on grace notes for a nascent SKU with only a couple dozen MAUs makes less sense than on the tentpole part of your app.

- Be really, really cautious when making product or strategy decisions that exclude portions of your userbase. Gating a really compelling feature behind a specific price point might make sense in the short term to drive expansion revenue, but makes it much harder to justify additional work against that feature because a very small subset of users will glean the results. [2]

- Shoot for `.1%` better instead. `1.001 ^ 365` only gives you `1.44`, not quite as gee-whiz astounding, but 44% per annum is a pretty damn good return, plus whatever big roofshot projects you end up taking on as well.
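For the skeptical, both compounding figures check out if you push them through logarithms (rounded, and assuming exactly 365 daily improvements):

```latex
\begin{align*}
1.01^{365}  &= e^{365 \ln 1.01}  \approx e^{3.632} \approx 37.8 \\
1.001^{365} &= e^{365 \ln 1.001} \approx e^{0.365} \approx 1.44
\end{align*}
```

Since $\log_{10} 37.8 \approx 1.58$, "one and a half orders of magnitude" is a fair description of the 1% version.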

[1] Let's be clear: I am the guiltiest of them all when it comes to this.

[2] For the maximally obvious example of this behavior, consider: every single PE-acquired SaaS which has changed branding and marketing pages three times in the past five years without shipping a single new feature to the app dashboard.

I’m increasingly convinced that for developer-first tools, a really good docs experience is a durable, non-trivial advantage.

Part of this thesis is that Really Good Docs Experiences, in addition to having great information architecture and strong writing/prose, should be thought of less as ancillary content repositories that can be farmed out to whatever your helpdesk software is and more as important web-apps in their own right.

Every now and then, when a docs site does something cool I try to figure out how they do it. Take shadcn/ui, for instance: how are all of these previews being generated based on TypeScript? It’d be one thing if the components were HTML (you can just throw them into an iframe), but clearly there’s something more complicated going on here.

Blessedly, these docs are open source, so I can find out exactly how. Let’s take the Notifications card as an example:

That page is being rendered in MDX; there’s a generic ComponentPreview component rendering it:

<ComponentPreview
  name="card-demo"
  description="A card showing notifications settings."
/>

It looks like that `ComponentPreview` component is just proxying out to some big `__registry__`:

import { Icons } from "@/components/icons";

// ...

const Preview = React.useMemo(() => {
  const Component = Index[config.style][name]?.component;

  if (!Component) {
    return (
      <p className="text-sm text-muted-foreground">
        Component{" "}
        <code className="relative rounded bg-muted px-[0.3rem] py-[0.2rem] font-mono text-sm">
          {name}
        </code>{" "}
        not found in registry.
      </p>
    );
  }

  return <Component />;
}, [name, config.style]);

The component in the registry is right here, and that’s the exact source code that we see. But how is the registry aware of its constituents?

Because it’s autogenerated! There’s a `scripts/build-registry.ts` file. This build file is gnarly (understandably so), but at a high level it spits out this massive export file:

"card-demo": {
  name: "card-demo",
  type: "components:example",
  registryDependencies: ["card","button","switch"],
  component: React.lazy(() => import("@/registry/default/example/card-demo")),
  source: "",
  files: ["registry/default/example/card-demo.tsx"],
  category: "undefined",
  subcategory: "undefined",
  chunks: []
},

And it finds the things to populate in that registry via a manually-enumerated list of potential components:

{
  name: "card-demo",
  type: "components:example",
  registryDependencies: ["card", "button", "switch"],
  files: ["example/card-demo.tsx"],
},

So, in sum, walking our way back to the final artifact:

- `shadcn` has a big, manually-created list of example files (and associated dependencies/metadata).

- A build-time script analyzes that list and autogenerates both an augmented code snippet for that example file and a big index that allows the site to import that snippet live.

- A previewer component pulls in that autogenned snippet and its raw-source equivalent.

- That previewer component is declared by an `mdx` file.
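The first two steps are easy to mock up. Below is a deliberately tiny TypeScript sketch of the pattern, not the real `build-registry.ts`: a hand-written list goes in, and the source text of an index module with lazy imports comes out. `RegistryEntry` and `buildIndexSource` are names I invented, and the generated output only includes a subset of the fields shown above.

```typescript
// Simplified, hypothetical version of a hand-written registry entry.
interface RegistryEntry {
  name: string;
  type: string;
  registryDependencies: string[];
  files: string[];
}

// Step 1: the manually enumerated list.
const examples: RegistryEntry[] = [
  {
    name: "card-demo",
    type: "components:example",
    registryDependencies: ["card", "button", "switch"],
    files: ["example/card-demo.tsx"],
  },
];

// Step 2: a build-time step turns that list into the source of an index module,
// with one lazily imported component per entry. The result is just a string;
// a real script would write it to disk (and add the React import).
function buildIndexSource(style: string, entries: RegistryEntry[]): string {
  const body = entries
    .map((entry) => {
      const files = entry.files.map((f) => `registry/${style}/${f}`);
      const importPath = `@/registry/${style}/${entry.files[0].replace(/\.tsx$/, "")}`;
      return [
        `    "${entry.name}": {`,
        `      name: "${entry.name}",`,
        `      type: "${entry.type}",`,
        `      registryDependencies: ${JSON.stringify(entry.registryDependencies)},`,
        `      component: React.lazy(() => import("${importPath}")),`,
        `      files: ${JSON.stringify(files)},`,
        `    },`,
      ].join("\n");
    })
    .join("\n");
  return `export const Index = {\n  "${style}": {\n${body}\n  },\n};\n`;
}

const generated = buildIndexSource("default", examples);
console.log(generated);
```

Once that index exists, the previewer's `Index[config.style][name]?.component` lookup is all the site needs at render time.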

Nothing magical; nothing complex. But certainly bespoke.