When we added support for complex filtering in Buttondown, I spent a long time trying to come up with a schema for filters that felt sufficiently ergonomic and future-proof. I had a few constraints, all of which were reasonable:

- It needed to be JSON-serializable, and trivially parsable by both the front-end and back-end.

- It needed to be arbitrarily extensible across a number of domains (you could filter subscribers, but you might also want to filter emails or other models).

- It needed to be able to handle both and and or logic (folks tagged foo and bar as well as folks tagged foo or bar).

- It needed to handle nested logic (folks tagged foo and folks tagged bar or baz).

The solution I landed upon is not, I’m sure, a novel one, but googling “recursive filter schema” was unsuccessful and I am really happy with the result so here it is in case you need something like this:

    from __future__ import annotations

    from dataclasses import dataclass
    from typing import Literal


    @dataclass
    class FilterGroup:
        filters: list[Filter]
        groups: list[FilterGroup]
        predicate: Literal["and", "or"]


    @dataclass
    class Filter:
        field: str
        operator: Literal["less_than", "greater_than", "equals", "not_equals", "contains", "not_contains"]
        value: str

And there you have it. Simple, easily serializable/type-safe, can handle everything you throw at it.
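To make the "easily serializable" claim concrete, here is a minimal sketch of a JSON round trip. It restates the two dataclasses so that it runs on its own; `group_to_json` and `group_from_dict` are hypothetical helper names for illustration, not something Buttondown necessarily ships.

```python
import json
from dataclasses import asdict, dataclass
from typing import Literal

Predicate = Literal["and", "or"]
Operator = Literal[
    "less_than", "greater_than", "equals",
    "not_equals", "contains", "not_contains",
]


@dataclass
class Filter:
    field: str
    operator: Operator
    value: str


@dataclass
class FilterGroup:
    filters: list[Filter]
    groups: list["FilterGroup"]
    predicate: Predicate


def group_to_json(group: FilterGroup) -> str:
    # asdict() recurses into nested dataclasses and lists for us.
    return json.dumps(asdict(group))


def group_from_dict(data: dict) -> FilterGroup:
    # Hypothetical helper: rebuild the tree, recursing into nested groups.
    return FilterGroup(
        filters=[Filter(**item) for item in data["filters"]],
        groups=[group_from_dict(item) for item in data["groups"]],
        predicate=data["predicate"],
    )


group = FilterGroup(
    filters=[Filter(field="tag", operator="equals", value="foo")],
    groups=[],
    predicate="and",
)
payload = group_to_json(group)
restored = group_from_dict(json.loads(payload))
```

Because every leaf is a plain string and every branch is a list, the same payload can be parsed on the front-end without any custom decoding. Note that `Literal` is only checked by a type checker; validating untrusted input at runtime would need an extra step.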

For example, a filter for all folks younger than 18 or older than 60 and retired:

    FilterGroup(
        predicate="or",
        filters=[
            Filter(
                field="age",
                operator="less_than",
                value="18"
            )
        ],
        groups=[
            FilterGroup(
                predicate="and",
                filters=[
                    Filter(
                        field="age",
                        operator="greater_than",
                        value="60"
                    ),
                    Filter(
                        field="status",
                        operator="equals",
                        value="retired"
                    )
                ],
                groups=[],
            )
        ]
    )
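One way to see why the recursion pays off is to evaluate a group against a record. The sketch below is an assumption-laden illustration rather than Buttondown's actual implementation: records are plain dicts, `less_than` and `greater_than` compare values numerically, a missing field never matches, and `matches_filter` / `matches_group` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Literal


@dataclass
class Filter:
    field: str
    operator: Literal[
        "less_than", "greater_than", "equals",
        "not_equals", "contains", "not_contains",
    ]
    value: str


@dataclass
class FilterGroup:
    filters: list[Filter]
    groups: list["FilterGroup"]
    predicate: Literal["and", "or"]


# Assumption: values are stored as strings, so ordering operators cast to float.
OPERATORS: dict[str, Callable[[str, str], bool]] = {
    "less_than": lambda actual, expected: float(actual) < float(expected),
    "greater_than": lambda actual, expected: float(actual) > float(expected),
    "equals": lambda actual, expected: actual == expected,
    "not_equals": lambda actual, expected: actual != expected,
    "contains": lambda actual, expected: expected in actual,
    "not_contains": lambda actual, expected: expected not in actual,
}


def matches_filter(record: dict, f: Filter) -> bool:
    actual = record.get(f.field)
    if actual is None:
        # Assumption: a missing field never matches, whatever the operator.
        return False
    return OPERATORS[f.operator](str(actual), f.value)


def matches_group(record: dict, group: FilterGroup) -> bool:
    # Leaf filters and nested groups are combined with the same predicate.
    results = [matches_filter(record, f) for f in group.filters]
    results += [matches_group(record, g) for g in group.groups]
    # Note: an empty "and" group matches everything; an empty "or" matches nothing.
    combine = all if group.predicate == "and" else any
    return combine(results)


query = FilterGroup(
    predicate="or",
    filters=[Filter(field="age", operator="less_than", value="18")],
    groups=[
        FilterGroup(
            predicate="and",
            filters=[
                Filter(field="age", operator="greater_than", value="60"),
                Filter(field="status", operator="equals", value="retired"),
            ],
            groups=[],
        )
    ],
)
```

With this `query`, a retired 70-year-old and a 12-year-old both match, while a 30-year-old or a 70-year-old who is still working does not. In a real back-end you would more likely translate the tree into a database query than filter in memory, but the recursive shape is the same.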

“Making art was really about the problem of the soul, of losing it. It was a technique for inhabiting the world. For not dissolving into it.”

All you can do is involve yourself totally in your own life, your own moment, Lonzi said. And when we feel pessimism crouching on our shoulders like a stinking vulture, he said, we banish it, we smother it with optimism. We want, and our want kills doom.

I (unlike much of the NYRB cognoscenti, apparently) really enjoyed Creation Lake last fall and resolved to explore more of Kushner's work — a resolution which led me to The Flamethrowers, her debut in spirit if not in fact, a book which threw her into a bit of a national spotlight as an Important Vital Female Writer.

Perhaps with a decade of hindsight, it is easy to understand why this book — and Kushner's style — was so hotly discussed. This book has the whiff of a high-budget prestige post-Mad Men series: big ideas, a smorgasbord of perspectives (literal and figurative), set pieces galore, a period piece that feels very much in conversation with What Is Going On Today, writing that is interesting and dense. It is hard not to come away from The Flamethrowers with a strong conviction that Kushner is very smart and very interesting and has a lot to say.

And yet. Perhaps it is indicative more of the literary movement of the past decade than of this novel, but: using 1970s New York as a backdrop for discussions of broader societal issues feels old hat and, moreover, boring. Skewering countercultural art scions as largely bourgeois sons of bourgeois parents is old hat and, moreover, boring. Listening to Harper's essays about the relationship between art and performance and reality, thinly rewritten to be monologues from thinly-written characters, is old hat and, moreover, boring.

This is a shame because when Kushner leaves the relative comfort of the framing device of urban-flight New York and explores more interesting points of view (post-war Italy; the Bonneville flats) the book comes alive. She is witty and idiosyncratic and, if not mean, cutting in a way that makes her writing feel vivacious, a quality I find lacking in Cusk and others in her vaguely-modernist cohort. There are images of real beauty in The Flamethrowers; it is also very clearly a work from a writer who has not learned to carve herself lean, and I think that's where Creation Lake proves itself the stronger work.

If there's been one through line in changes to Buttondown's architecture over the past six months or so, it's been the removal and consolidation of dependencies: on the front-end, back-end, and in paid services. I built our own very spartan version of Metabase, Notion, and Storybook; we vendored a half-dozen or so Django packages that were not worth the overhead of pulling from PyPI (and rewrote another half-dozen or so, which we will open-source in due time); we ripped out c3, our visualization library, and built our own; we ripped out vuedraggable and headlessui and a slew more of otherwise-underwhelming frontend packages in favor of purpose-built (faster, smaller, less-flexible) versions.

There are a few reasons for this:

- Both Buttondown as an application and I as a developer have now been around long enough to be scarred by big ecosystem changes. Python has gone through both the 2.x to 3.x transition and, more recently, the untyped to typed transition; Vue has gone from 2.x to 3.x. The academic problem of "what happens if this language completely changes?" is no longer academic, and packages that we installed back in 2018 slowly succumbed to bitrot.

- It's more obvious to me now than a few years ago that pulling in dependencies incurs a non-trivial learning cost for folks paratrooping into the codebase. A wrapper library around fetch might be marginally easier to invoke once you get used to it, but it's a meaningful bump in the learning curve to adapt to it for the first time.

- It is easier than ever to build 60% of a tool, which is problematic in many respects but useful if you know exactly which 60% you care about. (Internal tools like Storybook or Metabase are great examples of this. It was a fun and trivial exercise to get Claude to build a tool that did everything I wanted Metabase to do, and save me $120/mo in the process.)

We still use a lot of very heavy, very complex stuff that we're very happy with. Our editor sits on top of tiptap (and therefore ProseMirror); we use marked and turndown liberally, because they're fast and robust. On the Python side, our number of non-infrastructural packages is smaller but still meaningful (beautifulsoup, for instance, and django-allauth / django-anymail which are both worth their weight in gold). But the bar for pulling in a small dependency is much higher than it was, say, twelve months ago.

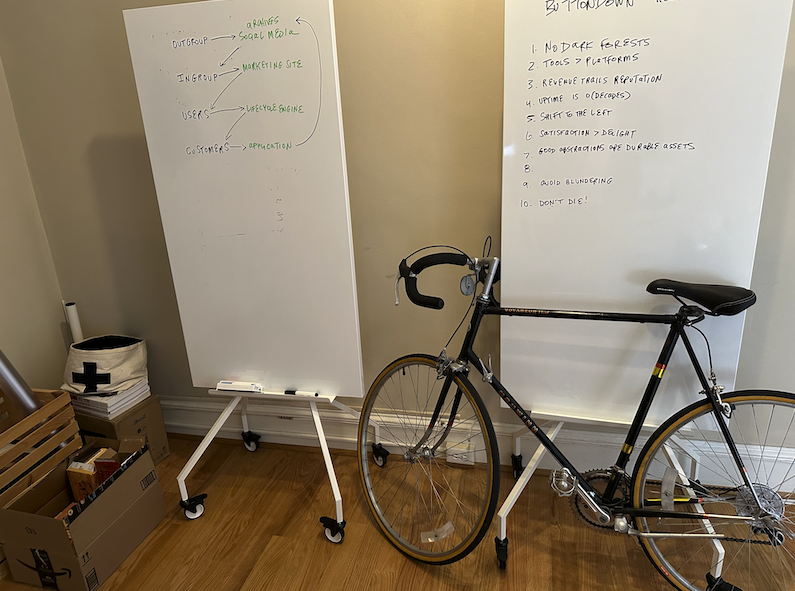

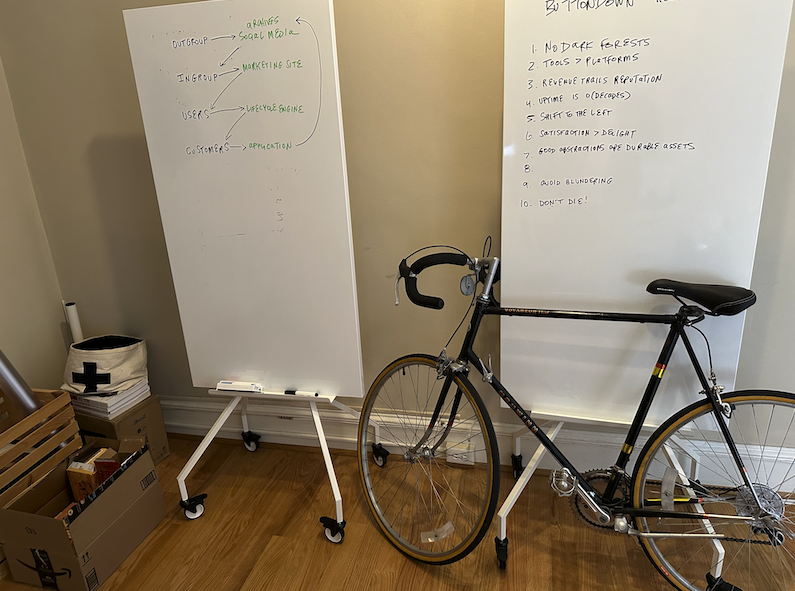

After many wonderful years of working out of my home office (see Workspaces), I've now "expanded" into an office of my own. 406 W Franklin St #201 is now the Richmond-area headquarters of Buttondown. Send me gifts!

The move is a bittersweet one; it was a great joy to be so close to Haley and Lucy (and, of course, Telly), and the flexibility of being able to hop off a call and then take the dog for a walk or hold Lucy for a while was very, very nice.

At the same time, for the first time in my life that flexibility has become a little bit of a burden! It turns out it is very hard to concentrate on responding to emails when your alternative is to play with your daughter giggling in the adjoining room; similarly, as Buttondown grows and as more and more of my time is spent on calls, it turns out long-winded demos and onboarding calls are logistically trickier when it is Nap Time a scant six feet away. And, beyond that, it's felt harder and harder to turn my brain off for the day: when there is always more work to be done, it's hard not to poke away at a stubborn pull request or jot down some strategy notes instead of being more present for my family (or even for myself, in a non-work capacity.)

So, I leased an office. The space is pretty cool. It's downtown, in the sweet spot of a little more than a mile away from the house: trivially walkable (or bikeable, as the above photo suggests) but far enough away to give me a good bit of mental space. The building is an old manor (turned dormitory, turned office building). I've got a bay window with plenty of light but no views; I've got a nice ethernet connection and a Mac Mini with very few things installed; I've got a big Ikea desk and a printer; I've got an alarm on my phone for 4:50pm, informing me that it's time to go home, where my world becomes once again lively and lovely, full of noise and joy and laughter.

I love this bit from Paul Graham on pattern-matching founders:

Though the most successful founders are usually good people, they tend to have a piratical gleam in their eye. They're not Goody Two-Shoes type good. Morally, they care about getting the big questions right, but not about observing proprieties. That's why I'd use the word naughty rather than evil. They delight in breaking rules, but not rules that matter. This quality may be redundant though; it may be implied by imagination.

I love this not because I agree with the sentiment, and in fact I think you can point to a lot of Icarian tendencies (and perhaps pervasive industry-wide rot) as germinating in this naughtiness, but because it is a specific and opinionated characteristic — as opposed to, like, "determined!" and "smart!" and "driven!" It's novel, it's a characteristic with a viewpoint around which reasonable people can agree/disagree.

Antimetal is not a YC company, but it certainly embodies naughtiness. Its founder made a large hullabaloo about trying to commission "the highest quality publicly available version" of the Facebook Red Book, but of course couldn't resist a tiny act of digital vandalism by inserting its own branding into the scan.

Does this matter, on a grand scale? Is this an evil act? Probably not, but certainly a naughty one.

Buttondown in 2025 has reached a sort of escape velocity, less in terms of growth per se (though also that!) and more in terms of the median user being very far away from my orbit: these users are less technical and more wary than the ones I am used to onboarding.

Users of new tools — especially tools that must be entrusted with important data — are wary these days. They're wary of pivots to video, of shifting business models and sudden price hikes and emails announcing that the curtain is coming down this time next week. It is unfortunate that this wariness — a kind of cynicism — is not only pervasive but entirely rational. Anyone who has used anything new over the past few years has a high number of since-shuttered apps that they trusted with their time and money and data and energy, only to be rewarded with an "Our Incredible Journey" email.

At a high level, I think this stems from the same vein as naughtiness: a tendency to think of systems and expectations as something to be overcome, of social contracts as things to be voided or ignored rather than bolstered.

We get a lot of questions that boil down to "why should I trust [Buttondown]?" The blithe answer — the one that I generally try not to give, even though I think it's the most rigorous and correct one — is that, well, you shouldn't — insofar as you should only trust any company as much as you can exfiltrate your data. We've made a lot of decisions in service of decades-long continuity; we're cash-flow positive, we're stable and robust; our incentives are aligned with yours. But, more than that, the email space is novel in that you can always pack up your entire dataset — archives, addresses, et al — and ship it off to a competitor. You shouldn't need to trust us; you should find us valuable enough to be worth keeping around.

Email is also unique in that it's, by software standards, a very mature industry — one with a long history already. Many of my customers come with data exports from tools that they started using fifteen years ago; prospects who I reached out to in 2019 follow up in 2025.

After twelve months of active usage, we ask every paying customer a single question: "why are you still using Buttondown?" There are two answers whose volume dwarfs the rest:

- Because the customer support is really good.

- Because I haven't had an experience that has prompted me to look elsewhere.

Customer goodwill is a real asset; it is one that will probably become more valuable over the next decade, as other software-shaped assets start to become devalued. It feels almost anodyne to say "it is in a company's best interest to do right by their customers", but our low churn and high unpaid growth in a space uniquely defined by lack of vendor lock-in is perhaps a sign that being nice is an undervalued strategy. And "being nice" in a meaningful sense is, like "being naughty", something that gets baked into an organization's culture very early and very deeply.

There are a handful of brilliant-yet-relatively-obscure works whose relative obscurity I ascribe almost entirely to the vagaries of chance, or at least "chance" in the way we think of, say, inclement weather. A sixty percent chance of snow is not 60% as defined like a constant in a program, but as an artifact of hundreds of thousands of variables and interactions that are impossible to understand or internalize in any systemic and coherent way. (Happy Endings, my favorite sitcom of all time, is a show that — if you replayed the universe ten times over — would have become a smash hit at least once or twice, but the dice just never quite landed on the right number.)

And it is partially through this lens that I find Pantheon so interesting, at least at a meta-textual level. But first, let's talk about the show itself.

Pantheon is a terrific show, screaming right out of the gate with such competence and ambition that I am shocked I had never heard of it before, and more shocked still that it's not an ur-text for science fiction fans everywhere. Developed from a series of short stories by Ken Liu, Pantheon draws inspiration (and lampshades it nicely) from everything from Serial Experiments Lain and Ghost in the Shell to more American fare like The Matrix and Blade Runner. There are works of science fiction that are obviously dumb and uninterested in anything but aesthetic; this is, I'd say, the exact opposite. It is very interested in exploring ideas and themes, and doing so in a way that feels neither laborious (like parts of the aforementioned Lain can) nor facile (like Devs, which shares a lot of DNA with this show). Characters are written and voiced extremely well; the show deftly balances the Really Big Ideas it has about where the world might be going and the very real personalities of the folks impacted by it.

You should watch Pantheon; very few people have. It was released on AMC+, a streaming service that I did not even know existed, and then moved to Netflix a few years later. Perhaps if Netflix had been the original distributor, this would have been the kind of smash hit that, say, Invincible became; it certainly seems poised to be embedded deep within the zeitgeist, and yet it toils away in cult-classic obscurity.

We live in a weird time, content-wise. There was a brief five-year period in which almost every media corporation was happy to throw unfathomable amounts of capital at creators of all repute under the vague promise of "amassing a catalog", and that was immediately followed by our current era where these media corporations are outright incentivized to completely hide some of their work so as to declare it a loss and harvest some tax breaks. I suspect there are dozens of Pantheons flitting around, buoyed by barely-there licensing deals, glittering gems waiting to be discovered and preserved.

Buttondown's core application is a Django app, and a fairly long-lived one at that — it was, until recently, sporting around seven hundred migration files (five hundred of which were in emails, the "main" module of the app). An engineer pointed out that the majority of our five-minute backend test suite was spent not even running the tests but just setting up the database and running all of these migrations in parallel.

I had been procrastinating squashing migrations for a while; the last time I did so was around two years ago, when I was being careful to the point of agony by using the official squash tooling offered by Django. Django's official squashing mechanism is clever, but tends to fall down when you have cross-module dependencies, and I lost an entire afternoon to trying to massage things into a workable state.

This time, I went with a different tactic: just delete the damn things and start over. (This is something that is inconsiderate if you have lots of folks working on the codebase or you're letting folks self-host the codebase; neither of these applies to us.)

rm -rf **/migrations/* worked well for speeding up the test suite, but it was insufficient for actually handling things in production. For this, I borrowed a snippet from django-zero-migrations (a library around essentially the same concept):

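    from django.core.management import call_command
    from django.db.migrations.recorder import MigrationRecorder

    # Forget every migration the database has recorded as applied...
    MigrationRecorder.Migration.objects.all().delete()

    # ...then record the fresh migrations as applied without running them.
    call_command("migrate", fake=True)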

And voila. No fuss, no downtime. Deployments are faster; CI is much faster; the codebase is 24K lines lighter. There was no second shoe.

If you were like me 24 hours ago, trying to find some vague permission from a stranger to do this the janky way: consider the permission granted. Just take a snapshot of your database beforehand just in case, and rimraf away.
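If a bare shell glob feels too trigger-happy, the file-deletion step can be sketched in Python with a dry run first. This is an illustration under assumptions, not what was actually run: `find_migration_files` and `delete_migration_files` are hypothetical names, and unlike the glob this version deliberately keeps each `migrations/__init__.py` so the packages stay importable before fresh migrations are generated.

```python
from pathlib import Path


def find_migration_files(project_root: Path) -> list[Path]:
    # Every .py file that sits directly in a "migrations" directory,
    # except the package marker. Point project_root at your own apps:
    # a virtualenv inside it would be swept up too.
    return sorted(
        path
        for path in project_root.rglob("*.py")
        if path.parent.name == "migrations" and path.name != "__init__.py"
    )


def delete_migration_files(project_root: Path, dry_run: bool = True) -> list[Path]:
    # Returns the files that were (or, in a dry run, would be) removed.
    targets = find_migration_files(project_root)
    if not dry_run:
        for path in targets:
            path.unlink()
    return targets
```

Calling `delete_migration_files(Path("."))` lists the candidates without touching anything; pass `dry_run=False` once the list looks right, then regenerate initial migrations with Django's `makemigrations` command.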

Humane Inc. started in 2018; it raised around $250M over five years before coming out of stealth mode with an AI pin that people did not like very much, and today they announced their sale (or, to be specific, the sale of their patent library) to HP for $116M.

Here is a hype video from July 2022, over a year before they ever announced — let alone released! — a product.

I don't think we can draw many interesting lessons from Humane. They feel like a relic from a younger, more Juicero-drenched era: even while they were in stealth mode there was an obvious perfume of vaporwave about them, and I think there's nothing inherently wrong with taking big, ambitious, VC-subsidized swings at gnarly problems that don't quite pan out.

The two relatively novel things that come to mind are:

- "Huge amounts of capital" is a good way to finance infrastructure, and a poor way to finance design.

- Any company that releases a $700 product for consumers and then neuters it with two weeks' notice for non-existential reasons is, in a meaningful way, evil.

I have a soft spot for this sort of twist-within-a-twist-within-a-twist film, both at a certain intellectual level and at a visceral "oho, they tricked me!" level. It is fun to be hoodwinked by a magician who knows his audience and knows sleight-of-hand.

Last year I watched two of Mamet's entries into this canon — The Spanish Prisoner and House of Games — and of the former (which I liked more) I wrote:

The only fault I can assign it is that it lacks any sort of emotional resonance, which sounds like a more biting criticism than I mean it to be.

The Spanish Prisoner was a perfectly-constructed puzzle of a film that took a brilliant plot (with every final-act twist feeling earned) and added a dagger-sharp script and little else, accomplishing exactly what it set out to do and little else.

Wild Things, on the other hand, feels like a funhouse mirror of the same general thesis. There is no precision, no brilliant script, no asexual cynicism: it is pure, sweaty, roiling id, it is Matt Dillon writing SEXCRIMES on a chalkboard, it is Neve Campbell making out with Denise Richards, it is not thinking about anything too hard.

The reveal of the initial con — Matt and Denise were in on it from the start! — is a great little twist, and I think a more successful version of this film could have stopped there with the hijinks and then proceeded to a more conventional answer to "okay, what happens next?". It is the hubris of the successive twists — the penultimate one, of Kevin Bacon secretly being in on it too from the start — that I think takes this film out of its trappings and forces you to realize just how harebrained the entire enterprise is.

And this is a shame, because there are successful moments. The film sells the setting — swampy, overhumid Florida — extremely well, and individual performances (in particular Bill Murray in an early "caricature of a caricature"-type role) work well. But the worst thing a film can do is beggar belief, and Wild Things refuses to let you take it seriously.

In retrospect, the early 2010s era of UCB/Second City-dominated comedy will go down as my favorite, and one that seems impossible to reproduce — the joy in all of these various productions (Bajillion Dollar Properties et al) is that everyone seemed so game to hop in for a couple scenes, and so you'd end up with things like this movie, where the call sheet reads pornographic a decade later: Paul Rudd, Amy Poehler, Cobie Smulders, Max Greenfield, Bill Hader, Ellie Kemper, Jason Mantzoukas, Ed Helms, Kenan Thompson, Adam Scott, the list goes on. It is hard to imagine this existing today — comedy has become a little too Moneyball'd, and Paul Rudd's stardom (and scheduling demands) make it much harder to imagine him dropping in for a week of shooting this kind of entertaining-but-middling fare.

I don't mean to say that this movie is "bad" when I say it's middling — this is, in fact, the kind of thing that I wish there was more of! It's silly, inconsequential, and with enough hits to justify its existence. David Wain's brand of post-post-ironic absurdity always treads the line of being too much for me, and here I think (in no small part because I am so fond of both the troupe and the source material) he nails it. This is a great way to spend eighty minutes; it is not my favorite comedy in the world, but I laughed and was left smiling.

Matthieu asks:

Since you do a lot of things (investor, dad, owner of a "small" business, blog writer) I was wondering what you don't do to keep up with this level of commitment. In the same line, it often said that behind one person success, there's a wife/husband that helps to manage those others things.

First, as a broad, abstract answer I point people to Two Big Things, which I’ve found to be broadly Correct:

Some people try to “have it all.” Men and women both. But it’s never true. At most two can function well; the rest do not. More often, there’s just one that receives the majority of the energy, and the rest suffers.

The personal answer I give is going to be out-of-date: the truth is, I’ve experienced fatherhood for around five months and I’ve experienced “fatherhood while trying to also ‘do work’” for less than half that time, and I don’t think any in medias res description is going to be accurate, let alone useful. (If I were to try to put it into words right now, it’d probably be something like: I’m up for eighteen hours a day, six of those are spent on Lucy duty, eight of those are spent on Work, two of those are spent on keeping the house and family in order, one of them is spent lifting, and one is spent on Telemachus.)

The pre-Lucy answer is probably more useful, even if it's now firmly entrenched in the past. When Haley and I talked about what my life as an independent technologist would look like, we settled on some ground rules, unimpeachable clauses in my contract not just as a husband but as a “person who wasn’t an absolute shit to live with”: to have dinner together every night, to take good care of myself (sleep well, eat healthy, work out every day, stay hydrated and sun-touched), to not lose sight of my own luck and providence. Those things were — and are — my topsoil. Everything else is labor — labor by choice, but hard work nonetheless.

A lot goes by the wayside! I am a terrible friend and correspondent these days; I love television and film but saw maybe a dozen movies total this year and only watch what Haley and I can consume during dinner (which in 2024 was all seventeen series of Taskmaster); I travel and experiment and hobby less than I otherwise would.

And in exchange, agency: an infinitely flexible schedule (though we define flexibility here to mean “can take off a random day for no reason and go for a lovely walk through Maymont” more than “can throw phone into the ocean for a week and know that the rest of the team will fill in the gaps”); work in which, even on its most menial days, I take full pride and equity.

I would be mortified if you read this (or, indeed, any of my writing) as a paean to Grindset.

I'm proud of what I've built, and the sacrifices it took to build it; I'm grateful for the life it's let me stumble into living, and would happily make the same choices again — knowing now with certainty where they would lead me.

But doing This Kind Of Thing means being in worse shape than I'd like to be, and spending less time with my friends than they deserve. These are reasonable (and temporary) choices, but they are choices nonetheless, and anyone who intimates that sufficiently divine levels of energy and discipline will exculpate you from making them is either a fool or a liar with something to sell.

You don't want to write. You want to find an excuse not to, and I'll just be one more thing to blame if you don't.

Beneath the decidedly seventies surface of Between the Lines — shag hair! a young Jeff Goldblum! sepia pastels! — is a timeless concept: a group of young kids, freshly out of college, have fashioned their identity out of a workplace that is exclusive and a little bit past its prime, and are trying to negotiate their understanding that the aspirational world they envisioned a few years ago is rapidly disappearing.

Perhaps it is my continued retreat into the burnishing embrace of Capital, but an earnest interpretation of these characters' love of their work, and of the Back Bay Mainline, does not exactly ring true to me. Matthew Monagle wrote in 2019:

The film has countless moments of insight into the struggle of the American journalist, from the staff’s shabby living conditions — the film offers perhaps the most realistic look at big city apartments ever committed to film — to how well-meaning writers navigate the competing interests of truth and financial trendlines

I think it requires a level of charity bordering on incredulity to treat Goldblum's character as someone meaningfully pushing back against the world around him — the film ends, very tellingly, with him parlaying his beat (and a few white lies) into getting a free beer. With the exception of a very young Bruno Kirby (playing a cub reporter) and Jon Korkes (who runs the paper), nobody in this film is meaningfully interested in journalism; what they're interested in is being A Journalist, and to that effect this film feels warm and resonant, equal parts sympathetic and cynical.

And this, I think, is a more meaningful thesis than to dwell too much on the demise of the alt-weekly. Cultural institutions are virtuous, whether they're formed under the auspices of a company or not — one way to rot such institutions is to cherish their outputs and ignore their inputs.

In the world of advertising, there's no such thing as a lie. There's only expedient exaggeration.

I wrote last week about Family Plot saying that Hitchcock's films feel like they simultaneously invent and master a given genre, and I had North by Northwest (which I watched shortly after watching Family Plot) in mind. What is there not to love about this film? So many of its scenes have been spoofed hundreds of times, and yet it carries with it none of the weight of inauguration: the set pieces are simple and elegant, Grant is at his Cary Grant-iest, and even though you are surprised by none of the twists and turns you are delighted and comforted by them.

It's hard to write much about North by Northwest under delusions of novelty. I think this film — like much of Hitchcock's work, excepting Rear Window — is less interested in truth than it is in enchantment, and it's funny to look retrospectively at considerations of the film as a "paranoid thriller" (which it certainly is!). Compare this with, say, The Parallax View — Grant is rankled but never ruffled, the movie ends with a hug and a tryst in a sleeping car, and the world keeps spinning away with a mystery solved and sinner saved. Hitchcock at his best cannot summon the heights of cynicism and world-weariness that dominated seventies cinema: and as good as the acme of that decade is, North by Northwest ages so well because it knows that, at the end of the day, it's meant to be a romp.

What a start to the year! January was a bit more flat-packed than I think Haley and I would have otherwise liked, but all with great things. (Highlights include: first weekend trip with Lucy, signing a lease on an office, and Third South's annual offsite.)

A roundup of writing from the past month:

Not as much fun writing in January as I had in the past few months; I promise my backlog of topics is longer than ever before.

I had held off on watching Being John Malkovich for a while, mostly because I think I had a pretty good idea of what it was and, while I wanted to watch it at some point, it never felt particularly urgent. (The vision in my head was a sort of Bosch painting filled with John Malkovich, which of course is true of exactly one scene [and perhaps the most indelible and famous of the film].)

Having finally gotten around to watching it, the biggest surprise was just how large the gulf was between my preconception of this thing — a silly art experiment of a movie — and the reality of it, a script and set filled with humor and wit and a thousand different ideas.

John Cusack plays a Nice Guy (not to be confused with a nice guy); Catherine Keener plays what I think we'd recognize today as an evil twin version of an MPDG; Cameron Diaz's (out of type!) performance was, for me, the show-stopper. It is really hard to seriously play a non-serious person: she does it with aplomb, and her arc is the soul and sugar of the movie that rescues it from collapsing into self-indulgence.

The worst thing a piece of comedy can do is, in the process of not taking itself seriously, forget to take its characters seriously. This film is deeply funny, and deeply weird, but Kaufman remembers every step of the way that these people are real, that they want things and feel things. (And I'm sure there are a lot of interesting things it's saying at a metatextual level, but I figure I should finish watching Adaptation before I mull on those too heavily.)

I told you about danger, didn't I? First it makes you sick, then when you get through it, it makes you very, very loving.

Every review (contemporary or otherwise) of Family Plot refers to it as a "lesser work" of Hitchcock's, and my reaction both immediately after watching it and now, a few weeks later, is largely the same: it is no North by Northwest or Rear Window, both of which felt so obviously like they had not only invented a genre but mastered it in the making.

Family Plot, if it can be said to have invented anything, feels like a spiritual predecessor to the Coen brothers' early oeuvre: tragicomic, centered around a crime and a misunderstanding, pitting the foolish and naive yet goodhearted against the evil yet fallible. Karen Black and Bruce Dern could have been the protagonists of Fargo; their performance, both as individuals and as a team, is lovely (as is William Devane, who I'd never seen in this phase of his career).

It's a fun film, but once the puzzle box of "how are all of these people going to run into each other?" is solved, it feels a little bit like the back third of a Sudoku puzzle: satisfying in its own right, sure, but not something you'll look back on with amity and awe.

If you want to get schmaltzy, though, I think there's a certain symbolic richness in Hitchcock's final directorial scene being as cute as this film's ending, a literal wink to the audience. You know, he says, that I hid this diamond myself: but I kept you guessing, and I kept your faith alive for as long as I saw fit.

Of course Primal Fear's legacy is (justifiably) dominated by Edward Norton's insanely talented debut performance, but what I came away most surprised by was the richness and texture of Gere and Linney's performances. I have nothing against either of them as actors, but there's a certain We Have George Clooney At Home quality to most of Gere's work, for instance: a sense of them being more of a well-executed archetype than a singular presence.

And that, I think, is the lens through which it's most fun to experience Primal Fear: a sort of gradual Dante-esque perversion of what feels like a standard SVU episode into hell: hell of the heart, hell of the soul. The hotshot amoral defender is revealed to be, in fact, a paladin succored and ruined by forces more wicked and clever than he thought extant; the wisecracking prosecutor discovers that she sacrificed everything and earned nothing. The two roles could not have been played by "bigger" actors; the strength of their arc relies on the fact that you think you know who these people are from the very first time they step onto the screen.

Guillermo posted this recently:

What you name your product matters more than people give it credit. It's your first and most universal UI to the world. Designing a good name requires multi-dimensional thinking and is full of edge cases, much like designing software.

I first will give credit where credit is due: I spent the first few years thinking "vercel" was phonetically interchangeable with "volcel" and therefore fairly irredeemable as a name, but I've since come around to the name a bit as being (and I do not mean this snarkily or negatively!) generically futuristic, like the name of an amoral corporation in a Philip K. Dick novel.

A few folks ask every year where the name for Buttondown came from. The answer is unexciting:

- Its killer feature was Markdown support, so I was trying to find a useful way to play off of that.

- "Buttondown" evokes, at least for me, the scent and touch of a well-worn OCBD, and that kind of timeless bourgeois aesthetic was what I was going for with the general branding.

It was, in retrospect, a good-but-not-great name with two flaws:

- It's a common term. Setting Google Alerts (et al) for "buttondown" meant a lot of menswear stuff and not a lot of email stuff.

- Because it's a common term, the .com was an expensive purchase (see Notes on buttondown.com for more on that).

We will probably never change the name. It's hard for me to imagine the ROI on a total rebrand like that ever justifying its own cost, and I have a soft spot for it even after all of these years. But all of this is to say: I don't know of any projects that have failed or succeeded because of a name. I would just try to avoid any obvious issues, and follow Seth's advice from 2003.

Dear Dad — you always told me that an honest man has nothing to fear, so I'm trying my best not to be afraid.

I floated the idea of a TBS canon when writing about Planes, Trains, and Automobiles — a sort of normcore cohort of good-but-not-fine movies whose pop cultural imprint has somehow never quite convinced me of their viewership, and right after doing so a friend wrote in suggesting Catch Me If You Can as a worthy entrant. And, indeed, I know the movie poster extremely well despite having never seen it.

It's a stretch to draw too many parallels between the two films (both now-rote plots largely carried by the sheer charisma of the leads and their winning chemistry, et cetera), but whereas Planes, Trains, and Automobiles had to win me over from my default position of "road trip movies are kind of boring" — here I was in from minute one, completely sold into this universe. Spielberg is at the peak of his powers: Christopher Walken delivers an absolute knockout performance with every second of his limited screen time, and DiCaprio — always an actor who felt like he was in conversation with a version of himself whom I had never seen — well, I get it now, this is the guy, this is the urtext which Killers of the Flower Moon destroys.

The movie does such a good job of selling you in its first two thirds with a sense of verve and playfulness (the John Williams score!) that the few moments of somberness hit you with affectation. The two truly dark scenes (DiCaprio's character meeting his father at a bar and understanding that the house of cards around which he's built his life has fallen, and then him peering in at his mother's house to understand there is no going back) do not feel cheap, do not feel schmaltzy. There is an emotional texture to this film that beggars comparison to Wolf of Wall Street: once you stop moving, once you catch your breath and the world settles around you, you realize you are alone, and so you better keep running.

(The kicker, the perfect kicker: the person about whom this story is ostensibly written made it all up. A perfectly meta-textual long con!)

Before reaching cruising altitude the plane sliced through a thick layer of cloud and for a few seconds there was nothing but white outside the window and I couldn’t help feeling, as we cut through the ephemeral landscape slowly thinning and dispersing and branching out in a thousand unmappable directions, that this moment had been prepared especially for me, some kind of aerial requiem held in honor of the city I was leaving behind, and in the end, I remember thinking a few minutes later as the Lufthansa stewardess rattled down the aisle with her drinks cart, there was little difference between clouds and shadows and other phenomena given shape by the human imagination.

It is sometimes obvious when a newer author organizes an entire book around a single paragraph or image, as if they've decided on the thesis they wish to present and spend the intervening pages gathering supporting evidence to bolster its strength. This little bon mot — clouds are like shadows! history is pareidolia! — quoted above as the final paragraph of Book of Clouds lampposts the entire novel, as if Aridjis' discursions on lights and fogs were insufficiently subtle.

There are good moments in this book, in much the same way that chatting with a clever but self-absorbed friend can still leave you with a smile on your face. Aridjis has a prose that manages to be both humane and detached, and her flirtations with the world of magical realism are not exactly Marquez-tier, but they're still interesting.

But, as is often the case with this sort of pseudo-autofictional debut that has become increasingly common over the past two decades, wit and skill make for a great garnish but a poor main course. This book is a pleasant way to spend a few hours, but it lacks substance and insight: it is, in fact, quite akin to spending two years in Berlin in your twenties and flying back home to consider yourself a changed person. You have nicer shoes; you can chat with your friends about your favorite spots on the Hermannstraße; your eyes are thinner and sharper. But you're still you; you haven't changed enough, you haven't changed at all.